Developers often talk about improving our ability to write code. Few of us talk about getting better at reading code. I believe that just as we can improve at reading fiction and non-fiction via various techniques; we can do the same for our code-reading faculties. And just as we can judge reading quality by looking at speed, comprehension and syntopical understanding, we can apply the same metrics to reading code. Given that I probably read around 3 times more code than I write, this is an area I definitely want to work on.

In this article I will explore some techniques and tactics I use when diving into a new codebase. I chose github.com/tidwall/wal as an example. This is a small Golang library that implements write-ahead logging. Write Ahead Logs are used to provide data integrity in applications. Such applications typically write undo/redo data or some other state into the log before performing an actual change (hence the name). Note that before this exercise I had never given any thought to the design and implementation of write-ahead logs.

My three guidelines when reading code

- Examine the API / public interfaces/

- If possible, play around with the exposed interfaces of the code in question.

- If not possible, look at the unit tests. When there is little or no documentation, unit tests can tell us how the author meant for the library to be used.

- When looking at internals, always look at the data structures first. I learnt this from https://jameskoppelcoaching.com/advanced-software-design-web-course, which I highly recommend

Diving in

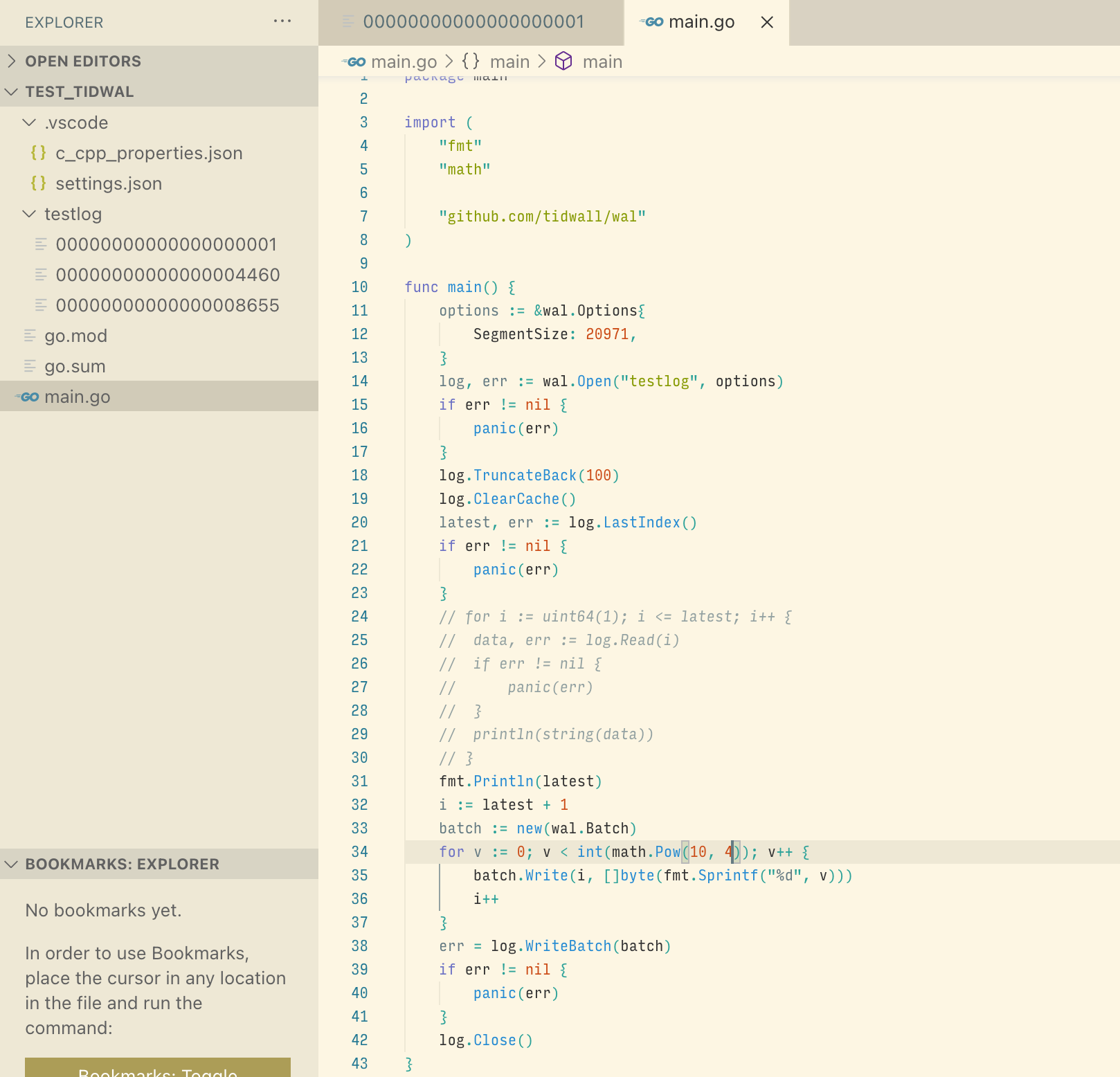

So the first thing I will do is to create a small program to call the library:

Right off the bat, this lets me see how the logs are being stored on disk. Interesting! So we see that a log is stored in a single directory, spread across files with numbered filenames. Calling the truncate function sometimes causes these files to be deleted.

I’m satisfied with the results of my experimentation, so I’ll skip looking at the unit tests.

Next thing is to look at the code. In this smallish library, the key file to look at is wal.go. The author has nicely left the core data structures at the top of the file, along with some commentary:

// Log represents a write ahead log

type Log struct {

mu sync.RWMutex

path string // absolute path to log directory

opts Options // log options

closed bool // log is closed

corrupt bool // log may be corrupt

segments []*segment // all known log segments

firstIndex uint64 // index of the first entry in log

lastIndex uint64 // index of the last entry in log

sfile *os.File // tail segment file handle

wbatch Batch // reusable write batch

scache tinylru.LRU // segment entries cache

}

// segment represents a single segment file.

type segment struct {

path string // path of segment file

index uint64 // first index of segment

ebuf []byte // cached entries buffer

epos []bpos // cached entries positions in buffer

}

Just from examining the data structures we can already see the following:

- This log file is divided into segments - so that’s what we saw earlier!

- Each segment starts at an index, and has an associated cache for the byte positions of every element index.

- The log itself has an associated cache with a Least-Recently-Used eviction policy.

- The log can be marked as corrupt - which probably means that there is some recovery mechanism.

At this point, if you have a specific question you want answered from the code, you can dive into the function internals. Personally, I want to find out why segmented file storage was chosen (instead of storing everything in a single file for instance).

The mutex catches my eye here, since it suggests a tradeoff between parallelism and safety. Looking at references to the mutex I can see that:

- Many operations including

Write*andTruncate*acquire a writer lock on the entire log before writing. - This indicates that writes are sequential - which makes sense since this is an append-only log.

At this point I want to know a bit more about segments; so I do a quick Google search. So apparently, one benefit of working with multiple segments (files) is that it becomes easier to truncate entries from the log. So it makes sense for us to a look at the Truncate* methods.

Cool! These methods are implemented with a pattern that is new for me: critical work is front-loaded into the initial portion of the function [sourcegraph]. Later on in the function, non-critical operations (deleting the unused segments, which can take a relatively long time) happen. If any error happens at this point of the function, the disk data is preserved and can be re-loaded correctly later on.

Lastly, lets look at the Read and load functions. This is where I find that reads are actually quite optimized. When loading a log, only the last segment is read from disk by default [sourcegraph]. Any segment that is not cached in memory will be read from disk on-demand (e.g. in loadSegment we see some of this caching behavior).

So here we are: an 1 hour later and a lot wiser :) Hope this article was useful - as usual, do reach out if you have any ideas, critiques or comments. One extra tip is to use an IDE(or else something like SourceGraph). Two features that make it much easier to explore a new code base are Jump To Reference and View References.